Artificial Intelligence (AI) within the Realm of Cyber Security

IEEE YP Scholar, IEEE Young Professionals (YP), Institute of Electrical and Electronics Engineers (IEEE)

Submitted on 22 June 2024; Accepted on 18 August 2024; Published on 11 September 2024

To cite this article: Z. Bin Akhtar, “Artificial Intelligence (AI) within the Realm of Cyber Security,” Insight. Electr. Electron. Eng., vol. 1, no. 1, pp. 1-11, 2024.

Abstract

The integration of artificial intelligence (AI) in cybersecurity has revolutionized the field, offering advanced solutions for threat detection and mitigation. However, this integration also introduces significant vulnerabilities and threats that need to be thoroughly understood and addressed. This manuscript investigates the critical issues associated with AI in cybersecurity, identifying specific vulnerabilities and the potential exploitation of AI systems by malicious actors. The primary objective of this research exploration is to explore these challenges and provide a systematic review of existing methodologies to mitigate such risks. By addressing these problems, the manuscript contributes to a comprehensive understanding of AI’s dual-edged impact on cybersecurity and proposes strategic solutions to enhance digital security and user privacy. This research aims to bridge the gap in current knowledge, offering insights and recommendations for further developing robust AI-driven cybersecurity frameworks.

Keywords:artificial intelligence; cybersecurity; data science; deep learning; machine learning; security; security vulnerabilities; privacy

Abbreviations:AI: artificial intelligence; SSL: secure sockets layer; NSA: National Security Agency; ML: machine learning; DDoS: denial-of-service; APTs: advanced persistent threats; LLMs: large language models; SSTI: server-side template injection; CSTI: client-side template injection;OSINT: open-source intelligence

1. Introduction

Artificial intelligence (AI) brings several advantages to cybersecurity. It excels at detecting new threats by utilizing sophisticated algorithms to identify malware and ransomware attacks based on behavior patterns. AI systems can provide real-time intelligence on global and industry-specific dangers, helping prioritize security measures effectively [1–3]. AI is also very crucial in combating bots, which constitute a significant portion of internet traffic [4,5]. By analyzing website traffic and discerning between good bots, bad bots, and humans, AI helps cybersecurity teams stay ahead of automated threats.

AI also contributes to breach risk prediction by leveraging IT asset inventory and threat exposure data to identify vulnerable areas and allocate resources accordingly [6–8]. AI plays a vital role in better endpoint protection, particularly in the context of remote work. Instead of relying on signature-based approaches, AI establishes behavioral baselines for endpoints and proactively identifies anomalies, offering advanced protection against emerging threats. AI empowers cybersecurity professionals to detect, prevent, and respond to cyber threats more effectively, keeping pace with the ever-evolving threat in the digital technology landscape [9–11].

AI is transforming the cybersecurity landscape by bringing numerous benefits and advancements to the field. One significant advantage is increased efficiency. AI automates routine tasks, freeing up security analysts to focus on more complex responsibilities like incident response and threat hunting. It also improves the analysis of large volumes of security data, rapidly detecting patterns and anomalies that may indicate cyber threats. AI automation streamlines vulnerability scanning, patch management, and incident investigation, enabling faster and more efficient cybersecurity operations. Another way that AI is changing cybersecurity is through improved accuracy. AI algorithms excel at detecting new and unknown threats, such as emerging malware variants, by analyzing behaviors and patterns instead of relying solely on known signatures. AI can also identify subtle indicators of potential threats in network traffic, providing enhanced accuracy in threat detection. The adaptive nature of AI allows it to continuously learn and refine its models, resulting in improved detection capabilities over time. Cost reduction is another significant impact of AI in cybersecurity. By automating routine tasks, organizations can reduce the workload and associated costs of human resources. AI's ability to improve the accuracy of threat detection helps avoid unnecessary costs related to false alarms or undetected breaches. AI enhances the efficiency of incident response, reducing the time to remediate security incidents and minimizing potential financial losses, reputational damage, and regulatory penalties. Proactive threat intelligence enabled by AI also contributes to cost reduction by preventing and mitigating incidents through timely and actionable insights. Real-time threat detection and response capabilities provided by AI are essential in the fast-paced cyber threat landscape.

AI processes the data rapidly, enabling the identification of suspicious patterns and anomalies in real time. This allows security teams to gain immediate visibility into potential threats and take prompt action. AI's ability to continuously learn and adapt ensures that organizations can proactively defend against emerging threats and respond effectively to minimize the impact of cyber-attacks. Scalability is another significant aspect of AI in cybersecurity. AI algorithms can handle and analyze massive amounts of data, including network traffic logs, system logs, user behaviors, and threat intelligence feeds. This scalability allows organizations to effectively process and detect cyber threats within complex and dynamic environments.

The ability to analyze large datasets efficiently reduces the time required for threat detection and response, optimizing resource allocation and improving operational efficiency. AI is revolutionizing the cybersecurity landscape by increasing efficiency, improving accuracy, reducing costs, enabling real-time threat detection with response, and providing scalability. By leveraging various types of AI-powered solutions alongside human expertise, organizations can effectively protect their digital assets against evolving cyber threats.

2. Methods and Experimental Analysis

This research employs a systematic approach to investigate the impact of AI on cybersecurity and privacy. It begins with a comprehensive background research and available knowledge exploration analysis to gather existing knowledge and identify various research gaps, sourcing and analyzing relevant academic papers, industry reports, and case studies related to AI and cybersecurity. Various data collection methods are utilized, including surveys, interviews with cybersecurity experts, and analysis of existing datasets. The collected data undergo rigorous pre-processing to ensure its quality, relevance, and applicability to the research domains. The research is guided by specific assumptions and research questions formulated to explore the vulnerabilities and threats posed by AI integration in cybersecurity, addressing both the risks and potential solutions offered by AI technologies. The performance of AI techniques in cybersecurity is evaluated using appropriate metrics, such as accuracy, precision, recall, and F1 score. These metrics help in assessing the effectiveness of AI-driven approaches compared to traditional computing methods.

Data visualization tools and techniques are employed to illustrate the findings clearly and effectively, using charts, graphs, and heatmaps to convey complex data insights in an understandable manner. AI-based techniques are compared with traditional cybersecurity methods to highlight the improvements and identify areas where AI can offer significant advantages. This comparison aids in understanding the practical implications of integrating AI into existing cybersecurity frameworks. The results are analyzed in the context of the research objectives, providing insights into how AI impacts cybersecurity and privacy. This includes discussing the implications of the findings for future cybersecurity practices and AI advancements. The research concludes by summarizing the key findings, acknowledging the limitations of the research, and suggesting prospects for future research. Recommendations are made for further exploration of AI applications in cybersecurity and enhancing privacy protections in the digital world. This structured methodology enables a thorough exploration of AI’s role in enhancing cybersecurity and addressing privacy concerns, contributing to the development of robust and secure AI-driven frameworks in the field.

3. Background Research and Available Knowledge

Before we get into all the nitty gritty within the retrospect which complexifies the context, let’s first learn the basics and history of its foundations. Computer security, also known as cybersecurity, digital security, or IT security, is the practice of protecting computer systems and networks from malicious attacks that can lead to unauthorized access, theft, or damage of hardware, software, or data, as well as disruption of services [1–5]. With the increasing reliance on computer systems, the internet, and wireless networks, cybersecurity has become a critical challenge in today's interconnected world. The history of cybersecurity can be traced back to the emergence of the internet and the digital transformation of society [6,7]. In the 1970s and 1980s, computer security primarily focused on academic settings until the advent of the internet, which brought about an increase in connectivity and the rise of computer viruses and network intrusions. The institutionalization of cyber threats and cybersecurity occurred in the 2000s.

The field of computer security was significantly influenced by the April 1967 session organized by Willis Ware at the Spring Joint Computer Conference, known as the Ware Report. This event and subsequent publication marked foundational moments in the history of computer security. The report addressed material, cultural, political, and social concerns related to computer security. In the 1970s and 1980s, computer threats were relatively limited as the technology was still in its early stages, and security breaches were easily identifiable. However, insider threats, such as unauthorized access to sensitive information by malicious insiders, were more prevalent [8–10].

During this time, computer firms like IBM started offering commercial access control systems and security software products. Notable incidents in the history of cybersecurity include the creation of the computer worm Creeper in 1971, the first documented case of cyber espionage performed by German hackers in the late 1980s, and the distribution of the Morris worm in 1988, which gained significant media attention. The development of secure protocols, such as secure sockets layer (SSL), by Netscape in the mid-1990s aimed to enhance the security of online communications. However, even these early versions had vulnerabilities that were later addressed in subsequent releases [11–15]. The role of government agencies, such as the National Security Agency (NSA), in cybersecurity is significant. The NSA is responsible for protecting U.S. information systems and collecting foreign intelligence. The agency analyses software for security flaws, often using them offensively rather than reporting them to software producers for remediation. This approach has led to the exploitation of security vulnerabilities by both allies and adversaries, contributing to the emergence of cyberwarfare capabilities worldwide. The history of cybersecurity reflects the evolution of computer systems, the internet, and the growing threats associated with them.

From the early days of computer viruses and network intrusions to the rise of cyber espionage and the development of secure protocols, the field of cybersecurity has become essential for protecting information systems and mitigating potential risks. The involvement of government agencies and the constant interplay between security measures and emerging threats continue to shape the landscape of cybersecurity [16–22]. The history of AI can be traced back to ancient times when myths and stories depicted the creation of artificial beings with intelligence or consciousness. However, the modern foundations of AI were established by philosophers who sought to understand human thinking as a mechanistic process involving the manipulation of symbols. This line of thinking eventually led to the invention of the programmable digital computer in the 1940s, which sparked the serious exploration of building an electronic brain [23,24].

The field of AI research was officially launched in the summer of 1956 at a workshop held at Dartmouth College. The participants of this workshop, who would become influential figures in AI research, were optimistic about achieving human-level intelligence in machines within a generation. Substantial funding was provided to support their efforts [25,26].

However, as the project progressed, it became evident that the challenges of developing AI were far greater than initially anticipated. Critics, such as James Lighthill, voiced concerns, and the U.S. and British governments responded by ceasing funding for undirected AI research in 1974. This marked the beginning of a timeline period known as the "AI winter," characterized by a decline in AI research and disillusionment with its progress [27–29]. In the early 1980s, the Japanese government initiated a visionary initiative that renewed interest and investment in AI, leading to substantial funding from governments and industry. Moreover, by the late 1980s, investors once again became disillusioned with the progress of AI, and funding was withdrawn. In the first decades of the 21st century, AI experienced a resurgence in its investment and interest [30].

This was possible through advancements in machine learning (ML) techniques, the availability of powerful computer hardware, and the accumulation of vast amounts of data. ML, in particular, demonstrated success in various academic and industrial applications, leading to a renewed optimism and enthusiasm for AI. Overall, the history of AI has been marked by periods of optimism, followed by periods of disappointment and reduced funding. However, recent advancements have sparked a new wave of excitement, with AI becoming increasingly integrated into various aspects of our lives, from personal assistants to autonomous vehicles, and opening up new possibilities for the near future.

4.CyberThreats and the Information Security Domain

Cyber threats have seen a significant increase in recent years, with the proliferation of technology and interconnected systems. The COVID-19 pandemic further accelerated this trend, resulting in a 600% surge in cybercrime since 2020. The impact of cyberattacks is wide-ranging, affecting nearly every industry and leading to major financial losses, reputational damage, legal liabilities, productivity disruptions, and business continuity issues. Estimates indicate that global cybercrime costs could reach $10.5 trillion by 2025, highlighting the severity of the problem.

Data breaches are a prevalent and costly consequence of cyber threats. In 2022, the global average cost of a data breach was $4.35 million, with the United States recording the highest average cost at $9.44 million. The healthcare industry experienced a significant jump in data breach costs, with an average of $10.1 million, reflecting a 42% increase since 2020. Cloud environments were also a common target, accounting for 45% of data breaches in 2022. Various motives drive cyber threats. Cybercrime, committed for financial gain by individuals or groups, is one prevalent motive. Politically motivated cyber-attacks seek to disrupt systems or gather sensitive information, while cyberterrorism aims to undermine electronic systems and impose fear or panic. Malware, a broad category of malicious software, poses a significant threat.

Viruses, Trojans, spyware, adware, botnets, and ransomware are among the different types of malware used by attackers to gain unauthorized access, disrupt operations, or extort victims. Ransomware attacks have grown in prominence, with organizations facing the threat of permanent data loss unless they pay a ransom, often in cryptocurrencies. Phishing attacks, where cybercriminals deceive the victims into divulging sensitive information, are another widespread method used. Other types of cyber threats also include distributed denial-of-service (DDoS) attacks, where a network is overloaded by coordinating a large number of systems; man-in-the-middle attacks, which intercept and steal data during communication; SQL injection, exploiting vulnerabilities in data-driven applications to access sensitive information; insider threats from individuals with authorized access to systems; advanced persistent threats (APTs), infiltrations that remain undetected over an extended period for data theft; and especially cryptojacking, where victims' computing resources are hijacked for cryptocurrency mining. Data security plays a crucial role in combating cyber threats. It encompasses measures to protect data from unauthorized access, corruption, or accidental errors. This technique includes data privacy, encryption techniques such as cryptography and homomorphic encryption, and ensuring data integrity. Addressing cyber threats requires continuous vigilance, robust cybersecurity measures, and proactive strategies. Organizations must invest in cybersecurity infrastructure, employee training, threat detection and response systems, and data protection mechanisms to mitigate risks and safeguard sensitive information in an increasingly interconnected digital landscape.

Cybersecurity is a critical practice aimed at safeguarding electronic systems, networks, computers, mobile devices, programs, and data from malicious digital attacks. It involves the protection of digital information and infrastructure to prevent unauthorized access, data breaches, and disruption of business processes. To achieve cybersecurity, an organization typically implements an infrastructure consisting of three key components: IT security, cyber security, and network security. IT security, also known as electronic information security, focuses on protecting both physical and digital data from intruders. It safeguards data at rest and in transit, ensuring its integrity and confidentiality.

Cyber security is a subset of IT security and specifically focuses on safeguarding digital data on networks, computers, and devices from unauthorized access, attack, and destruction. It involves measures such as firewalls, encryption, intrusion detection systems, and incident response protocols to prevent cyber threats and mitigate their impact. Network security, or computer security, is a subset of cyber security and is concerned with protecting data transmitted through computers and devices in a network. It employs hardware and software solutions to ensure the secure transmission and reception of data, guarding against interception, tampering, and unauthorized access.

In practice, IT security professionals and cyber security professionals often collaborate to protect an organization's data and prevent unauthorized access. While some companies employ separate professionals for IT security and cyber security, the roles may overlap, with cyber security professionals primarily focusing on securing digital data across various networks and systems. It's important to note that cybersecurity is a part of the broader field of information security. Information security encompasses the main protection of data and information and information systems across different realms, including the physical world. As anything occurring in the cyber realm involves the protection of information and systems, information security can be seen as a superset that encompasses cyber security. Cybersecurity plays a crucial role in safeguarding digital assets and ensuring the privacy, integrity, and availability of data in an increasingly interconnected and digitized world [31–33]. It requires proactive measures, ongoing monitoring, and the adoption of robust security practices to mitigate risks and effectively respond to cyber threats. To provide an idea, the figure illustration represents the matter(Figure 1).

FIGURE 1: A diagram of information security (cyber and network security).

5. Cybercrimes and Security Vulnerabilities

According to Forbes, 76% of enterprises have prioritized AI and ML in their IT budgets, driven by the increasing volume of data that needs to be analyzed to identify and mitigate cyber threats. AI is becoming an essential tool in the fight against cybercrime. The rapid acceleration of cybercrime has been facilitated by the lower barrier to entry for malicious actors, who have evolved their business models to include subscription services and starter kits. Additionally, the use of large language models (LLMs) like ChatGPT to write malicious code highlights the potential challenges to cybersecurity. However, it is crucial for business leaders in today's digital world to be knowledgeable about the developments of AI in cybersecurity.

Blackberry's research found that the majority of IT decision-makers plan to invest in AI-driven cybersecurity, recognizing its potential to enhance their defenses against cyber threats. While there are concerns about the misuse of AI, particularly in social engineering and skilling up less experienced hackers, the actual threat posed by AI-generated code may not be as significant as some headlines suggest.

While AI can generate code that gets close to completion, it often requires human intelligence and refinement to make it fully functional. This means that the last mile of human intervention is crucial, reducing the potential threat. It is important to acknowledge that AI can also be used to help protect against cyber threats. AI has the ability to make inferences, recognize patterns, and perform proactive actions to shield against online threats. It can automate incident response, streamline threat hunting, and analyze large amounts of data to improve cybersecurity. AI-powered tools provide continuous monitoring, real-time attack detection, and automation of incident response. They can also assist in identifying false positives and strengthening access control measures. Furthermore, AI can help mitigate insider threats by analyzing user behavior and at the same time identifying employees engaged in malicious activities.

By leveraging AI in cybersecurity, organizations can improve their threat detection, response times, and overall security posture. While there are many benefits to using AI in cybersecurity, there are also potential risks that must be considered. Bias in AI algorithms can lead to flawed decisions or missed threats if the training data is biased or unrepresentative. Addressing bias requires diverse and representative training data, pre-processing techniques, ongoing monitoring, transparency, and continuous education. Attackers can leverage AI technologies to enhance the effectiveness of their cyber-attacks. AI can be used to create highly convincing phishing emails, develop advanced evasion techniques, automate attack tools, facilitate deepfake attacks, and execute adversarial attacks. These malicious uses of AI pose significant challenges for defensive measures and necessitate robust cybersecurity strategies. To be precise, business leaders must recognize the potential dangers and benefits of using AI in cybersecurity. While there are risks associated with the misuse of AI, efforts can be made to address bias and ensure fairness and equity. AI can be harnessed to improve cybersecurity by automating tasks, providing continuous monitoring, enhancing threat detection, and mitigating insider threats.

By embracing AI responsibly, organizations can strengthen their security defenses in the face of evolving cyber threats. AI-powered security solutions, like any software or system, can have vulnerabilities that attackers may exploit. These vulnerabilities can compromise the effectiveness of cybersecurity measures. To mitigate these risks, organizations should regularly assess the security of AI systems through penetration testing and simulations of real-world attacks. Secure development practices should be followed from the early stages, including adhering to coding standards, conducting thorough security assessments, and using secure development frameworks and tools.

Secure deployment and configuration practices are crucial, involving proper access controls, secure storage of sensitive data, and implementation of secure communication protocols. Regular updates and patching should be performed to address known vulnerabilities. Ongoing monitoring, robust logging, and incident response plans are necessary to detect and respond to security incidents promptly. When adopting AI systems from third-party vendors, thorough security evaluations should be conducted to ensure secure development practices and strong security measures.

However, there are challenges to implementing AI in security. Lack of transparency and interpretability is a common issue, as AI systems often function as black boxes, making it challenging to understand how decisions are made. Bias and fairness concerns arise when AI systems replicate biases present in the training data. Integration with existing security systems can be problematic if AI-powered solutions do not effectively work alongside other tools in an organization's security architecture.

To be more accurate, organizations need to address security vulnerabilities in AI systems through regular assessments, secure development practices, proper deployment and configuration, ongoing monitoring, and vendor evaluations. They must also consider challenges such as lack of transparency, bias, and integration with existing security systems when implementing AI in security. By addressing these concerns, organizations can enhance the effectiveness and reliability of their AI-powered security solutions.

Vulnerabilities are weaknesses in a computer system, either in the hardware or software, that compromise the overall security of the entire system. These vulnerabilities can be exploited by threat actors, such as attackers, to gain unauthorized access or perform malicious actions within the system. Vulnerabilities are sometimes also referred to as the attack surface, as they provide opportunities for attackers to breach the system's defenses. Vulnerability management is a cyclical practice aimed at identifying, assessing, and addressing vulnerabilities in computing systems. The process typically involves discovering all assets within a system, prioritizing them based on their criticality, conducting vulnerability scans or assessments, reporting on the findings, remediating the identified vulnerabilities, and verifying the effectiveness of the remediation efforts. This iterative process helps organizations stay proactive in addressing vulnerabilities and minimizing the risk of successful attacks.

It is also very important to differentiate between vulnerabilities and security risks. While vulnerabilities represent potential weaknesses, security risks refer to the potential impact or harm that can result from the exploitation of vulnerabilities. A vulnerability becomes a security risk when there is a significant potential for damage or compromise. However, not all vulnerabilities pose a risk, particularly when the affected asset has no value or the vulnerability is not easily exploitable.

An exploitable vulnerability is one that has known instances of successful attacks. The window of vulnerability refers to the time period starting from when a security hole is introduced or discovered in deployed software until it is patched or mitigated, or when the attacker's access is removed. Zero-day attacks, where vulnerabilities are exploited before a fix is available, represent a particularly challenging type of vulnerability. It is worth noting that vulnerabilities are not limited to software. Hardware, physical site vulnerabilities, or weaknesses in personnel practices can also introduce vulnerabilities in a system. Additionally, certain constructs in programming languages that are complex or difficult to use properly can lead to a very large number of vulnerabilities if not implemented correctly. To put it simply, understanding and managing vulnerabilities is crucial for maintaining the security of computer systems. By actively identifying and addressing vulnerabilities, organizations can enhance their defense against potential attacks and reduce the likelihood of security breaches.

6. The Abuse of AI within Cybersecurity

Cybercriminals are finding ways to exploit AI for their malicious activities. One method is through social engineering schemes, where AI automates the processes and allows for more personalized and sophisticated messaging to deceive victims. This leads to a higher success rate for cybercriminals in carrying out phishing, vishing, and business email compromise scams. Additionally, AI is being used to enhance password hacking algorithms, enabling hackers to decipher passwords more quickly and accurately, emphasizing the need for strong password security measures. Another concerning use of AI by hackers is the creation of deepfakes, which involve manipulating visual or audio content to impersonate individuals and spread deceptive information. Deepfakes can be combined with social engineering, extortion, and other schemes to cause confusion and fear among those who consume the manipulated content. Furthermore, hackers can employ data poisoning techniques to alter the training data of AI algorithms, leading to biased or incorrect decisions. Data poisoning can be difficult to detect and can result in severe consequences by the time it is discovered. In this changing AI environment, individuals and businesses need to review their cybersecurity practices and ensure they follow best practices, especially in areas such as passwords, data privacy, personal cybersecurity, and protection against social engineering. Regularly updating the security measures and always staying informed about the latest cyber-security tips is crucial. While AI offers many benefits in improving cybersecurity, it is important to remain vigilant and adapt security practices to mitigate the risks associated with AI-powered attacks.

One challenge in using AI for cybersecurity is the need for substantial resources and financial investments to build and maintain AI systems effectively. Acquiring diverse and reliable datasets for training AI systems can be time-consuming and costly, making it difficult for many organizations to afford. Inaccurate or incomplete datasets can also lead to incorrect results and false positives, highlighting the great importance of quality data for AI systems to function effectively.

Furthermore, the same AI technologies used for defense can also be leveraged by cybercriminals to analyze their malware and launch more advanced attacks. This highlights the ongoing cat-and-mouse game between cybersecurity professionals and hackers, where advancements in AI technology are utilized on both sides. In other words, while AI has the potential to enhance cybersecurity, it is important to be aware of the ways in which hackers can abuse AI for their malicious purposes.

Implementing robust cybersecurity measures, staying informed about the evolving AI landscape, and adapting security practices accordingly are crucial for individuals and organizations to protect themselves in this changing environment. Malware and phishing attacks are significant cybersecurity threats that can cause substantial harm to individuals and organizations.

However, the advancements in AI have brought new possibilities for detecting and mitigating these threats. AI-based cybersecurity systems have shown promising results in malware detection. Traditional signature-based approaches can only detect known malware, while AI-powered systems can identify dynamically changing malicious agents more effectively. By utilizing techniques like computer vision and neural networks, researchers have achieved high accuracy in detecting malware across various file formats.

AI systems can analyze the inherent characteristics of malware to identify potential threats, improving the over-all security efficiency compared to legacy detection systems. Phishing attacks, which often lead to the activation of malware, can also be combated using AI. ML-based techniques can analyze the structure of emails and classify them as legitimate or phishing emails, achieving high accuracy rates. AI-enabled tools, such as Mimecast CyberGraph, employ ML to block trackers, detect phishing emails, and alert users about potential threats. AI's role in cybersecurity goes beyond malware and phishing detection. It helps in knowledge consolidation by leveraging ML models to retain and utilize vast amounts of historical data to detect security breaches effectively. AI can keep track of global and industry-specific vulnerabilities, constantly updating its knowledge to defend against new threat actors and prevent upcoming attacks.

Tech giants like Google, IBM, and Microsoft have invested significant resources in developing advanced AI systems for threat identification and mitigation, making substantial progress in protecting users and enterprises. Additionally, AI tools can predict breach risks, prioritize security measures, and automate threat detection and mitigation processes. By reducing the time taken to detect and respond to cyber threats, AI contributes to minimizing the damage caused by attacks. It enables organizations to allocate resources more effectively and develop cyber resilience to withstand future attacks.

While AI offers tremendous potential in improving cybersecurity, it also poses certain risks and challenges. Data manipulation, where hackers alter training data or introduce biases, can impact the efficiency of AI models. Hackers themselves can exploit AI techniques to develop intelligent malware that evades detection. Insufficient or biased training data can result in false positives or a false sense of security. Privacy concerns arise when user data is used to train AI models without adequate protection. Moreover, AI systems themselves can become targets of cyber-attacks, with hackers feeding poisonous data to manipulate their behavior.

To address these challenges, it is crucial to build robust infrastructures that counter the risks associated with AI in cybersecurity. Data integrity and privacy protection measures, continuous model updating, and proactive security measures are essential for ensuring the safe and secure operation of AI-powered cybersecurity systems. AI brings significant advancements to malware and phishing detection, knowledge consolidation, threat prediction, and automation in cybersecurity. While there are risks and challenges to overcome, organizations must leverage AI's potential while implementing robust security measures to create a safe digital environment. By combining human expertise with AI capabilities, the cybersecurity landscape can be strengthened to defend against evolving threats and ensure the protection of individuals and businesses.

AI has been adopted by several tech giants and cybersecurity companies to enhance their capabilities in the field. Google has been utilizing ML techniques in Gmail and various other services for years, with deep learning algorithms allowing for independent adjustments and self-regulation. IBM heavily relies on its Watson cognitive learning platform for tasks like knowledge consolidation and threat detection, aiming to automate routine processes in security operations centralized areas. Juniper Networks envisions a future with autonomous networks, leveraging AI, ML, and intent-driven networking. Balbix Security Cloud also uses AI-powered risk predictions and vulnerability management to bolster cyber-security efforts. However, the rise of AI in cybersecurity also presents risks. Adversaries can employ AI and ML techniques to evade defenses and launch more sophisticated attacks. They can target the data used to train security algorithms, manipulate information, or develop mutating malware to avoid detection. It is crucial for organizations to be aware of these downsides and implement safeguards to protect against potential threats.

7. Cybersecurity Vulnerabilities: Case Studies Analysis

Server-side template injection (SSTI) and client-side template injection (CSTI) are significant security vulnerabilities that occur when attackers are able to inject and execute malicious code within template engines used by web applications. SSTI happens when user-provided input is improperly sanitized and subsequently incorporated into server-side templates, which are then executed by the server.

Common server-side templating engines vulnerable to such attacks include Twig, Jinja2, Django, ExpressJS, and Razor. Conversely, CSTI occurs when user input is unsanitized and injected into client-side templates, which are executed by the victim’s browser. Popular client-side templating engines susceptible to CSTI include AngularJS, Vue, Handlebars, and Mustache. An illustrative case of an SSTI attack involved an application allowing users to create email templates using the Twig templating engine. By inserting the test string {{7*7}} into the template, the attackers confirmed the vulnerability when the test email returned the value “49”, indicating that the input was executed by the template engine. This discovery allowed the attackers to exploit Twig’s ‘filter’ function to execute arbitrary system commands, leading to remote code execution under the context of the www-data user. Such an attack can have severe implications, including potential privilege escalation and unauthorized access to internal services.

To prevent SSTI attacks, it is crucial to sanitize user inputs rigorously, ensuring that no malicious code is processed by the template engine. Using template engines with built-in security measures, such as automatic input escaping, strict input validation, and sandboxing, can also mitigate these risks. For CSTI, preventing attacks involves similar measures: properly validating and sanitizing user inputs, adhering to secure coding practices, conducting regular vulnerability assessments, and keeping software updated with the latest security patches. By implementing these protective strategies, developers can significantly reduce the likelihood of both SSTI and CSTI attacks, thereby enhancing the overall security of web applications.

Next, physical social engineering tests involve a team of experts attempting to gain access to buildings and offices to evaluate the security of the infrastructure and employees. These tests are usually conducted with mature cybersecurity clients, with only a few staff members aware of the ongoing test to ensure genuine responses from employees. In a recent engagement, the challenge was to access two different sites, remain unchallenged, and gather additional information by interacting with employees.

Reconnaissance and pretext: Effective reconnaissance or open-source intelligence (OSINT) is crucial for these tests, especially for physical engagements. Tools like Google Maps and LinkedIn were used to gather information about the building layouts and potential entry methods and develop a convincing pretext for why they should be allowed entry. In this case, posing as contractors or consultants due to ongoing building work proved to be an effective pretext.

Initial access: The team targeted a satellite site first, considering it easier to breach. Upon arrival, wearing Hi-Vis vests, they were easily allowed inside by a staff member. Inside, they encountered key card access restrictions but managed to find an unoccupied conference room. Here, they connected laptops to ethernet ports and conducted network scans, even obtaining the corporate Wi-Fi password from staff members who did not question their presence.

Main target: With the initial success boosting their confidence, the team targeted the head office next. Despite initial resistance at the reception, they eventually gained entry by convincing an employee of their legitimate presence. Inside, the lack of a proper sign-in process and the hot-desking environment allowed them to move freely and conduct further network attacks. They managed to collect user hashes and cracked one belonging to a security team member. This led to discovering a domain admin account vulnerable to Kerberoasting, eventually giving them domain administrator credentials and full access to the network.

The team provided several recommendations to enhance security. Recommendations include the following engagements:

Enforce visitor sign-in processes: Ensuring that all visitors follow a strict sign-in process can prevent unauthorized access.

Staff training on social engineering risks: Educating staff about the dangers of social engineering can mitigate the risk of such attacks.

Badge security: Avoid revealing badge details on social media to prevent attackers from creating fake badges.

Monitor all entrances: Tailgating from non-monitored entrances like smoking areas can be prevented by accounting for all entry points.

Network security measures: Implementing MAC filtering for ethernet connections and securing Wi-Fi access points can prevent unauthorized network access.

These measures can significantly bolster the physical and cybersecurity posture of an organization, making it harder for social engineering attacks to succeed.

8. Results and Findings

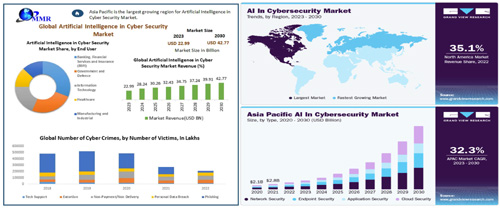

The integration of AI into cybersecurity is rapidly becoming a critical area of concern, particularly regarding privacy and security measures. This heightened interest is driven by significant advancements in internet technologies and the increased networking capabilities between device peripherals, which facilitate vast and rapid data flows. These developments necessitate a deeper understanding of how AI impacts cybersecurity and the measures required to safeguard sensitive information. This research provides detailed visual analytical illustrations, as depicted within figures (Figures2–7), to enhance understanding of this complex topic. These figures range from conceptual frameworks for AI applications in cybersecurity to detailed security analytics based on the research context. Each figure is designed to offer insights into various aspects of AI integration, illustrating both the potential benefits and the associated risks.

The rapid advancements in AI technology continue to significantly influence digital systems, making our lives increasingly dependent on technical computing. This shift underscores the importance of robust cybersecurity measures. As AI evolves, it reshapes our approach to cybersecurity, necessitating continuous adaptation and innovation to address emerging threats effectively.

The expansion of AI's reach is expected to bring about profound changes in various sectors, with cybersecurity remaining a paramount concern. As AI systems become more sophisticated, they offer enhanced capabilities for threat detection and response but also introduce new vulnerabilities that must be carefully managed. This dual-edged nature of AI highlights the need for ongoing research and development to balance the benefits of AI with the imperative to protect privacy and security.

The integration of AI into cybersecurity presents both opportunities and challenges. The visualizations provided in this research aim to elucidate these dynamics, offering a comprehensive overview of AI's role in enhancing cybersecurity measures. By addressing these critical issues, this research contributes to the development of more secure and resilient digital systems, ensuring that the benefits of AI can be realized while safeguarding against potential threats.

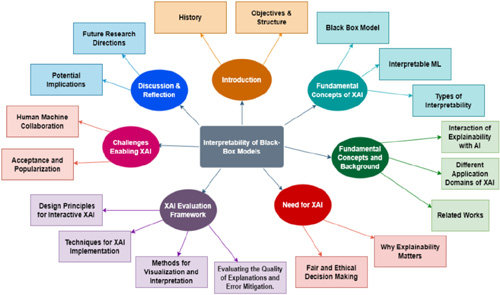

FIGURE 2: A diagram for the conceptual framework of XAI applications in cyber security (Black box models).

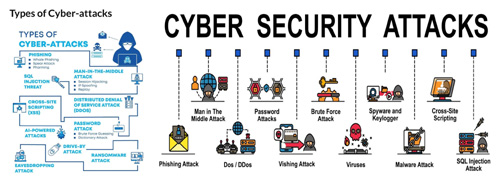

FIGURE 3: An overview of some of the most common types of cyber-attacks.

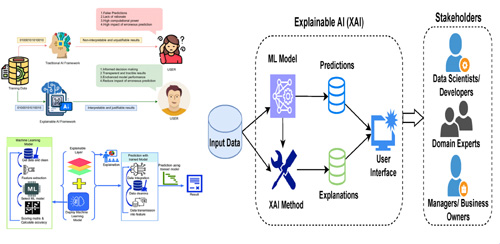

FIGURE 4: An overview of the AI and XAI-user's perspective context.

FIGURE 5: A visualization of use cases for AI in cybersecurity with the most dangerous threats.

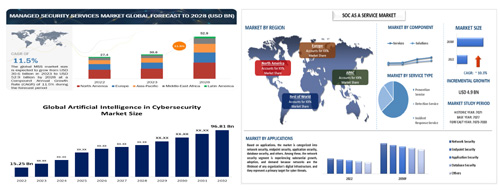

FIGURE 6: AI for cyber security analytics - an industrial international view.

FIGURE 7: AI for cyber security analytics - a global view.

9. Discussion and Future Directions

While AI brings tremendous benefits to the cybersecurity landscape, such as improving threat detection and automating processes, it is essential to remain vigilant and address the challenges associated with its use. Organizations must ensure data integrity, prevent data manipulation, and gather sufficient high-quality data for accurate AI system performance.

By taking all these precautions, they can harness the power of AI while mitigating potential risks and bolstering their overall cybersecurity posture. Implementing AI in security requires careful consideration of best practices to ensure effectiveness and mitigate risks.

Organizations should develop a well-defined AI strategy that aligns their AI deployment with their security challenges and integrates it into their existing security architecture and processes. Data quality and privacy are crucial aspects, as AI relies on accurate and reliable data. Organizations should ensure data integrity and privacy protections when using AI systems. Additionally, building an ethical framework is important to address potential biases and fairness concerns in AI models, particularly when making decisions that impact individuals. Regular testing and updating of AI models are necessary to adapt to the evolving threat landscape and maintain optimal performance. Looking toward the future, AI's role in security is expected to expand. Advancements in AI and ML technologies will enhance their utility and potential security applications.

The integration of AI with emerging technologies like 5G and IoT holds promise for improved security capabilities. The impact of AI on the security industry and job market is also anticipated, with AI automating repetitive tasks and enabling human operators to focus on partnering with AI systems to enhance security at scale. Check Point, a cybersecurity solutions provider already incorporates AI into its offerings to enhance threat prevention. Check Point Horizon XDR/XPR is an example of an AI-driven security solution. Interested individuals can sign up for the early availability program to learn more about Horizon XDR/XPR and its utilization of AI for security purposes. By embracing these best practices and leveraging AI effectively, organizations can bolster their cybersecurity defenses and stay ahead of evolving threats.

The increasing use of AI in cybersecurity presents a transformative opportunity to strengthen security measures and defend against evolving cyber threats. AI offers capabilities such as real-time threat detection, analysis of large volumes of data, and automation of tasks, which can significantly enhance the effectiveness and efficiency of cybersecurity efforts.

By leveraging AI, organizations can detect and respond to threats more effectively, form powerful human-machine partnerships, and continuously adapt to new types of threats. However, it is crucial to understand the associated risks and at the same time implement appropriate measures to mitigate them. As cyber threats continue to significantly rise in high volume and complexity, integrating AI into cybersecurity strategies becomes increasingly important for maintaining robust security in the digital landscape. The ongoing cat-and-mouse game between hackers and cybersecurity professionals necessitates the adoption of advanced technologies like AI to stay ahead of malicious actors.

In today's rapidly advancing world, technological innovations have significantly accelerated the capabilities of computing machinery, making it crucial to stay updated with the latest technologies. Our daily lives are increasingly governed by machine-driven processes and programmed instructions, facilitated by continuous data flows. While this technological progress offers ease of access, comfort, and reliable remote functionality, it also poses potential threats if not properly managed.

On one hand, AI can greatly enhance the cybersecurity landscape, providing advanced tools for protecting sensitive information and detecting threats. However, without adequate oversight and control, the same AI technologies could be exploited, turning these advancements into serious risks. Ensuring the responsible use of AI in cybersecurity requires establishing proper guidelines and leveraging expert insights to maintain a delicate balance between innovation and security.

AI has the potential to transform the cyber world into a safer, more efficient environment for humans. Conversely, the misuse of AI could lead to conflicts and disasters, undermining the very security it aims to enhance. Preventing such scenarios is a collective responsibility that falls on all stakeholders, including researchers, developers, policymakers, and end-users. As human beings, we must actively engage in ensuring that AI technologies are developed and deployed responsibly, safeguarding the benefits while mitigating the risks. While AI holds the promise of significantly improving cybersecurity, it is imperative to maintain vigilant oversight and control. By doing so, we can harness the positive aspects of AI while preventing its potential misuse, ensuring a secure and balanced digital future.

10. Conclusion

In the modern era of technological advancements, our civilization is rapidly progressing towards a new frontier of accelerated computing. In the coming years, AI is poised to reshape the world, introducing new dimensions of efficiency and capability across various sectors. The cybersecurity landscape, in particular, is undergoing significant transformations due to AI integration, offering both opportunities and challenges. The involvement of AI in cybersecurity brings about substantial benefits, such as enhanced threat detection, automated responses to security incidents, and improved predictive analytics. However, it is crucial to acknowledge the potential risks associated with AI misuse. Despite the positive intentions behind AI development, there will inevitably be individuals and entities who seek to exploit these technologies, leading to increased privacy and security vulnerabilities.

As machines become smarter, it is imperative for society to evolve and adapt to these technological changes. This evolution involves not only embracing the advancements AI offers but also addressing the ethical and security implications of its use. Ensuring the responsible deployment of AI in cybersecurity requires the establishment of robust guidelines and regulatory frameworks. These measures are essential to mitigate the risks of AI abuse and to safeguard the privacy and security of individuals and organizations. The dual-edged nature of AI means that, while it can significantly enhance our cybersecurity capabilities, it also has the potential to create new avenues for cyber threats if not properly managed. Therefore, it falls upon us, as a society, to develop and implement mechanisms that maximize the benefits of AI while minimizing its risks. This involves continuous research, collaboration among stakeholders, and the development of best practices that promote the ethical use of AI.

As AI continues to advance and integrate into the cybersecurity landscape, it is essential to balance innovation with security. By establishing comprehensive guidelines and fostering a culture of responsible AI usage, we can ensure that these technological advancements benefit all of humanity, creating a safer and more secure digital environment.

Author Contributions

The idea representation with the research focuses along with the context concerning the investigative exploration and manuscript writing was done by the author himself. All the datasets, data models, data materials, data information, and computing toolsets used and retrieved for the conduction of this research are mentioned within the manuscript and acknowledged with their associated references where appropriate.

Funding

No funding was provided for the conduction of this research.

Conflict of Interests

There is no conflict of interest or any type of competing interest for this research.

Consent for Publication

The author has read and approved the manuscript and has agreed to its publication.

Availability of Data and Materials

The various original data models and datasets of which are not all publicly available, because they contain private information. The available platform provided datasets and data models that support the findings and information of the research investigations are referenced where appropriate.

References

-

N. Perlroth, “How the U.S. Lost to Hackers,” The New York Times, 2021.

-

MSSP Alert. (2017 Jul. 24). Multi-Vector Attacks Demand Multi-Vector Protection.

-

Case Western Reserve University. (2015). Identifying Phishing Attempts.

-

H. Lebo, “The UCLA Internet Report: Surveying the Digital Future,” 2000.

-

J. Haugeland, Artificial Intelligence: The Very Idea, Cambridge: The MIT Press, 1989.

-

A. M. Turing, “Computing Machinery and Intelligence,” Mind, Vol. 59, no. 236, pp. 433-460, Oct 1950.

-

J. Vijayan. (2022, Jun. 28). New Vulnerability Database Catalogs Cloud Security Issues.

-

D. Harley. (2015, Mar. 10). Operating System Vulnerabilities, Exploits and Insecurity.

-

ScienceDaily. (2019, Feb. 25). Most laptops vulnerable to attack via peripheral devices.